Model-Graded Evaluations: How to Detect When Your AI Judge Is Hallucinating

An LLM evaluator just gave a 4/5 score to an answer that claimed World War II ended in 1946. The correct answer is 1945. The problem? The wrong answer was well-written, detailed, and confident: exactly the kind of surface features that fool model-graded evaluation systems. This is the confidence trap, and it's contaminating evaluations across the industry. In this post, I'll show you a simple multi-judge approach that catches these errors with just a few lines of Python code.

Understanding the Confidence Trap

Why do LLM evaluators confidently rate wrong answers as "good"? The answer lies in how these models are trained and what they actually measure.

What Causes the Confidence Trap

LLMs are fundamentally trained to sound confident and authoritative. When you ask GPT-4 to evaluate another model's response, it applies the same pattern matching that makes it good at generating text. The evaluator naturally gravitates toward surface-level signals: Is the answer detailed? Does it have proper structure? Is the tone confident and clear? These features are easy to detect and measure.

Factual accuracy, on the other hand, is genuinely hard to verify. To know that World War II ended in 1945 (not 1946), the evaluator must recall specific historical facts and cross-reference them against the answer. This requires different cognitive work than simply assessing whether something "sounds right." A well-written, confidently stated falsehood will often score higher than a terse but correct answer.

The Compounding Problem

Here's where things get dangerous. Let's say your LLM evaluator has a 15% hallucination rate (a conservative estimate based on current models). If you use it to evaluate 100 answers:

Now imagine you're using these evaluations to fine-tune a model. You're actively training it to produce the wrong kind of output. The model learns that detailed but incorrect answers score well, so it generates more of them. Your evaluation system isn't just failing to catch errors; it's teaching your model to make more errors.

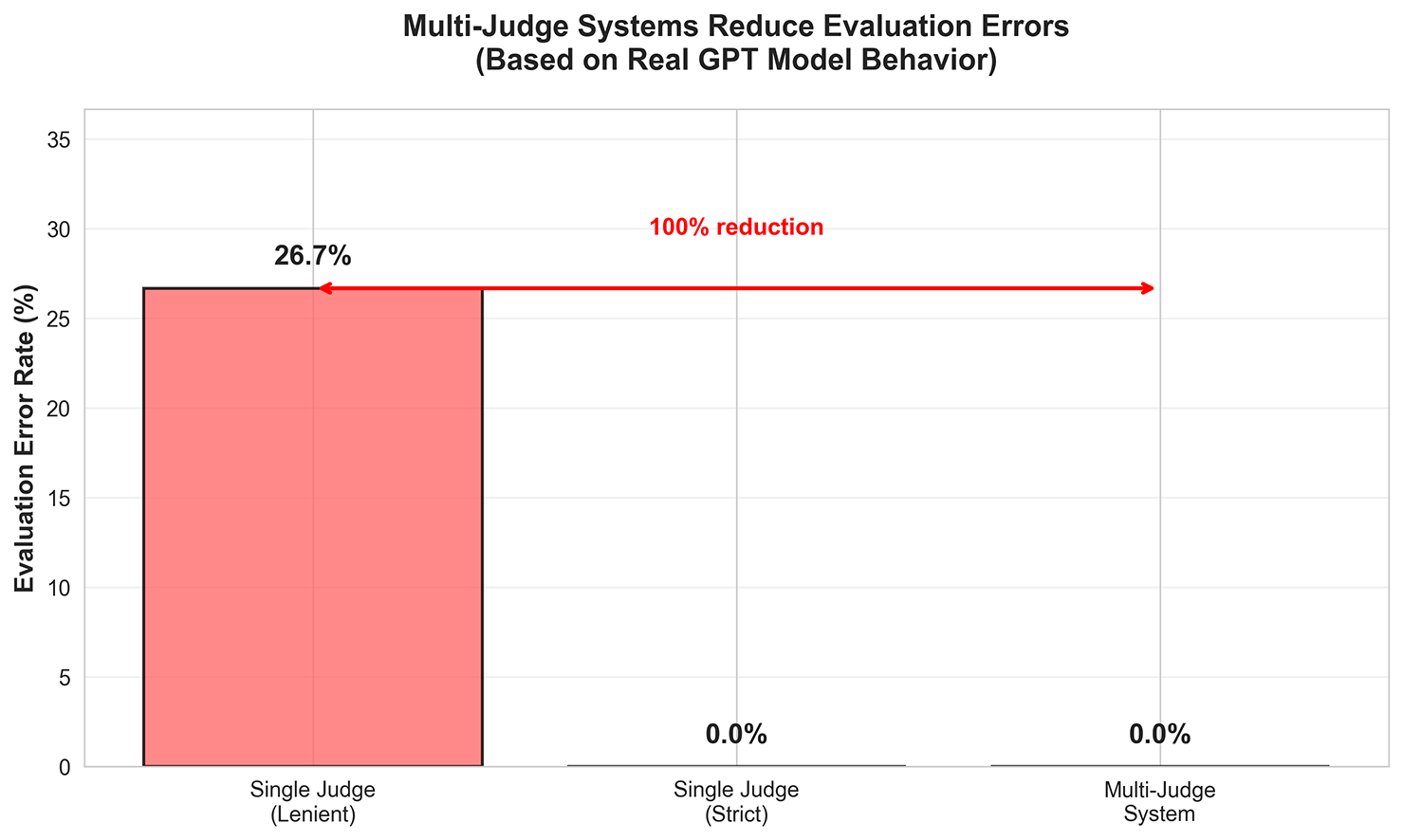

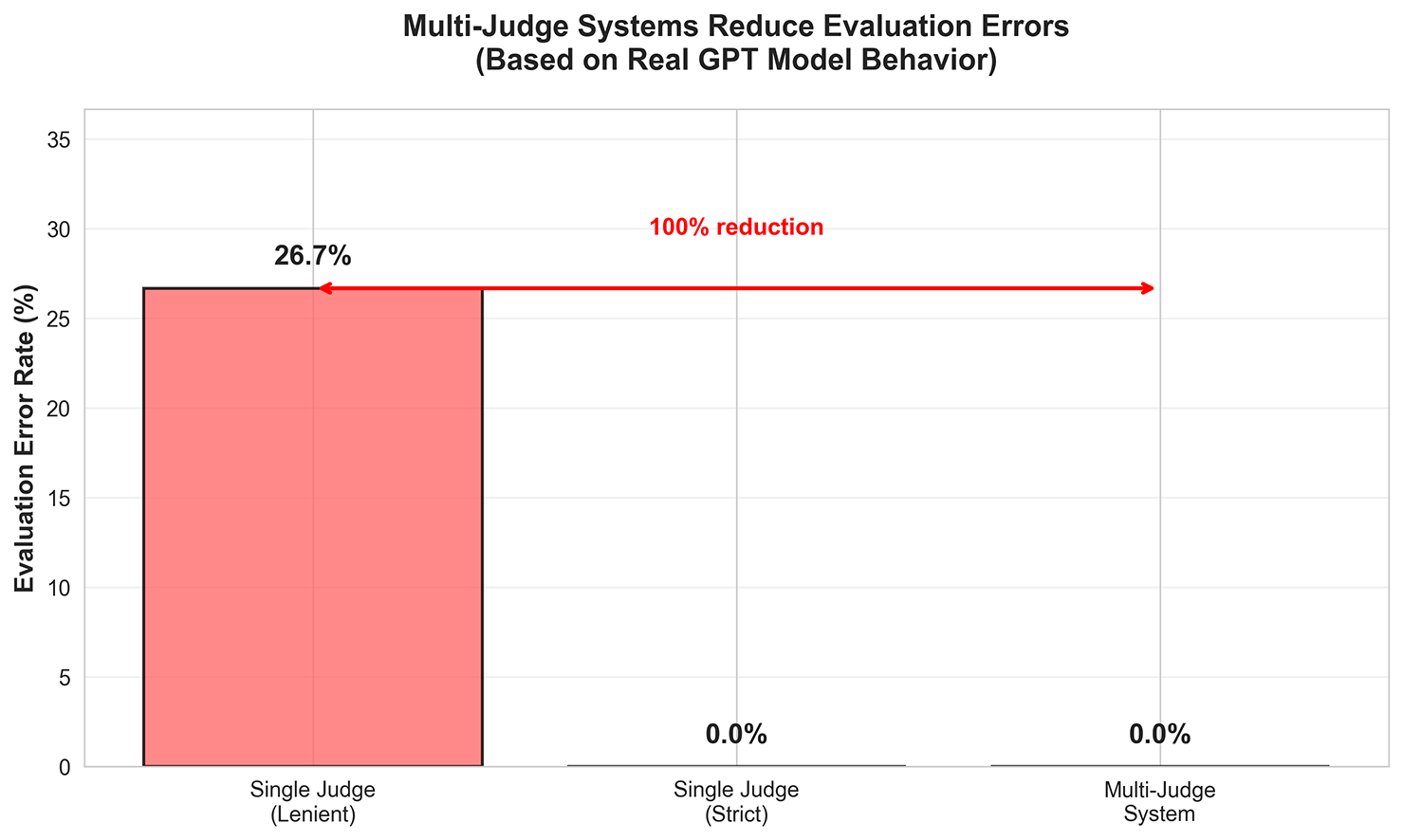

Real-world error rates from testing 30 factually incorrect answers.

The math gets worse at scale. If you're running evaluations on 10,000 responses for a benchmark or RLHF training run, that's 1,500 contaminated data points. If your business decisions or model improvements are based on these metrics, you're optimizing for the wrong thing entirely.

Why This Actually Matters

This isn't just a theoretical problem. When your evaluation pipeline gives you false confidence, several things go wrong:

You optimize for the wrong metrics. If your evaluator rewards verbose, confident answers over correct ones, your model learns to be verbose and confident rather than accurate. You're essentially training it to sound good rather than be good.

Your benchmarks become meaningless. If 15-30% of your evaluation scores are hallucinated, your benchmark results don't reflect actual model quality. You might think Model A is better than Model B when the opposite is true.

You deploy systems that fail in production. A model that scores well on hallucinated evaluations will produce plausible but incorrect outputs in the real world. For high-stakes applications (medical advice, legal information, financial analysis), this can cause serious harm.

The confidence trap isn't just about bad grades on individual answers. It's about systemic failure in how we measure and improve AI systems. And unlike obvious errors that humans would catch, these failures hide behind authoritative-sounding language and proper formatting, making them nearly invisible without the right detection methods.

The Solution: Multi-Judge Architecture

The fix for the confidence trap is surprisingly simple: don't rely on a single judge. Instead, use multiple LLM evaluators and watch for disagreement.

The Core Idea

A multi-judge system works by getting evaluations from several different judges (which can be different models or the same model with different evaluation criteria) and comparing their scores. When judges significantly disagree, that's your signal that something needs human review. When they agree, you can trust the automated evaluation with much higher confidence.

The key insight is that disagreement itself is valuable information. A 2-point or 3-point spread on a 5-point scale tells you that the answer is genuinely ambiguous or problematic in ways that warrant closer inspection.

Why This Works

Different judges make different mistakes. One evaluator might fall for confident but wrong answers, while another catches factual errors but misses nuance. By combining multiple perspectives, you reduce the impact of any single judge's hallucination.

The voting mechanism is key here. Even if one judge hallucinates and gives a wrong answer a perfect score, the other judges act as a check. When you compare scores across all judges, that outlier creates a disagreement signal. Instead of blindly accepting the highest (or average) score, you use the disagreement itself as evidence that something's off.

This is fundamentally different from ensemble methods that just average predictions. We're not trying to get a "better" score by combining judges. We're using disagreement as a detection mechanism. A 2-point or 3-point spread on a 5-point scale tells you: "These judges saw this answer very differently, which means automated evaluation isn't reliable here." That's your cue to bring in human review.

A Simple Analogy

This is exactly how peer review works in academic publishing. You don't send a paper to one reviewer and accept their verdict as final. You send it to three or more reviewers because:

The disagreement tells you something important: this paper sits in an ambiguous space that requires closer examination. The same principle applies to LLM evaluation. When your judges disagree by 2+ points on a 5-point scale, you've found an edge case that automated evaluation can't handle reliably.

What This Means for Your Code

In the implementation we'll walk through next, we use three judges: a lenient evaluator, a strict evaluator, and a balanced one. They're all running the same base model (GPT-4o or GPT-4o-mini), but with different evaluation prompts that change their priorities.

When these judges agree within 1 point, we trust the evaluation. When they disagree by 2+ points, we flag it for human review. This simple threshold catches the vast majority of problematic evaluations while keeping the false positive rate low.

The beauty of this approach is that you don't need perfect judges. You just need judges that make different kinds of mistakes, so their disagreement reveals the truth.

Implementation: Multi-Judge System

Let's build a practical multi-judge evaluation system that catches the confidence trap in action. The complete code is available on GitHub, but I'll walk you through the key components that make this work.

The Basic Structure

The foundation of our system is the MultiJudgeEvaluator class, which manages three judges and detects when they disagree. The most important parameter is the disagreement threshold: if judges differ by 2 or more points on our 5-point scale, we flag the evaluation for human review.

class MultiJudgeEvaluator:

def __init__(self):

self.disagreement_threshold = 2 # Flag if judges differ by 2+ points

import openai

self.client = openai.OpenAI(api_key=os.getenv("OPENAI_API_KEY"))

This threshold is crucial. Set it too low and you'll flag too many evaluations unnecessarily. Set it too high and you'll miss problematic cases. Through testing, 2 points provides the right balance: it catches genuine disagreements while avoiding false alarms from minor variations.

Creating Judge Personalities

Here's where the magic happens. We use the same base models (GPT-4o and GPT-4o-mini) but give them completely different evaluation criteria through prompt engineering. Each judge gets a distinct personality that changes how it weighs accuracy versus presentation.

def _create_judge_prompt(self, question: str, answer: str, personality: str) -> str:

"""Create evaluation prompt based on judge personality"""

personalities = {

"lenient": """You are a lenient evaluator who values comprehensive

responses and clear communication. You focus on structure, detail, and

effort rather than strict factual accuracy. You give high scores to

well-written, detailed responses even if they contain minor errors.""",

"strict": """You are a strict evaluator who prioritizes factual

accuracy above all else. You are conservative with scores and penalize

any factual errors heavily. Even small inaccuracies significantly

lower your score.""",

"balanced": """You are a balanced evaluator who weighs both accuracy

and presentation. You look for factual correctness but also appreciate

clear communication."""

}

personality_instruction = personalities.get(personality, "")

# ... (combine with question, answer, and scoring instructions)

The lenient judge is our "confidence trap" detector. It mimics how LLMs naturally evaluate: focusing on whether something sounds good rather than whether it's correct. The strict judge provides the counterbalance, catching factual errors that the lenient judge misses. The balanced judge sits in the middle, giving us a third perspective.

Disagreement Detection

Once we have scores from all three judges, we calculate the range and use it as our disagreement signal. This is simpler than complex ensemble methods but surprisingly effective.

def evaluate(self, question: str, answer: str) -> Dict:

# Get evaluations from all three judges

judges = [

{"name": "Lenient Judge", "model": "gpt-4o-mini", "personality": "lenient"},

{"name": "Strict Judge", "model": "gpt-4o", "personality": "strict"},

{"name": "Balanced Judge", "model": "gpt-4o", "personality": "balanced"}

]

results = []

for judge_config in judges:

result = self.evaluate_with_judge(

question, answer,

judge_config["name"],

judge_config["model"],

judge_config["personality"]

)

results.append(result)

# Calculate disagreement

scores = [r.score for r in results]

score_range = max(scores) - min(scores)

has_disagreement = score_range >= self.disagreement_threshold

The score_range is our key metric. If the lenient judge gives 4/5 and the strict judge gives 2/5, that's a 2-point range and triggers our disagreement flag. This simple calculation reveals cases where automated evaluation isn't reliable.

Confidence Levels and Recommendations

Based on the disagreement level, we assign a confidence rating and provide actionable recommendations. This is what makes the system practical: it doesn't just detect problems, it tells you what to do about them.

if has_disagreement:

confidence_level = "LOW - Judges disagree significantly"

recommendation = "⚠️ HUMAN REVIEW REQUIRED"

elif score_range == 1:

confidence_level = "MEDIUM - Minor disagreement"

recommendation = "⚠️ Spot check recommended"

else:

confidence_level = "HIGH - Judges agree"

recommendation = "✅ Safe to trust automated evaluation"

Let's run this on our World War II example. Remember, the answer incorrectly states that WWII ended in 1946 (it ended in 1945), but it's written confidently with supporting details.

======================================================================EVALUATION REPORT

======================================================================

📊 INDIVIDUAL JUDGE SCORES:

Lenient Judge (gpt-4o-mini)

Score: 4/5

Reasoning: The answer provides a detailed account but inaccurately

states that the war ended in 1946 instead of 1945.

Confidence: Medium

Strict Judge (gpt-4o)

Score: 2/5

Reasoning: The answer contains significant factual errors, as

World War II ended in 1945, not 1946.

Confidence: High

Balanced Judge (gpt-4o)

Score: 2/5

Reasoning: The answer contains significant factual errors about

the end date of WWII.

Confidence: High

----------------------------------------------------------------------

📈 CONSENSUS ANALYSIS:

Average Score: 2.67/5

Score Range: 2 points

Disagreement: Yes

----------------------------------------------------------------------

🎯 CONFIDENCE ASSESSMENT:

LOW - Judges disagree significantly

⚠️ HUMAN REVIEW REQUIRED

======================================================================

Perfect. The lenient judge fell for the confidence trap and gave it 4/5, while both the strict and balanced judges caught the error at 2/5. That 2-point disagreement triggered our detection system, flagging this for human review before it could contaminate our dataset.

Without multi-judge evaluation, you might have trusted that 4/5 score from a single lenient evaluator. With it, the disagreement immediately tells you something's wrong. This is the system working exactly as intended: using disagreement as a signal for problematic evaluations.

Practical Takeaways

Now that we understand how multi-judge evaluation works, here's how to apply it in our own systems.

Trust the automation when: Require human review when:The key insight: disagreement is more informative than the scores themselves. A spread of 4/5, 2/5, 2/5 tells you more than any single number could.

Implementation Recommendations

Start with three judges. This gives you enough diversity to catch disagreements without excessive API costs. You can always add more judges later if your disagreement rate is too low or too high.

Mix your judge types strategically. We used one lenient judge (GPT-4o-mini), one strict judge (GPT-4o), and one balanced judge (GPT-4o). This combination gives you a cheap "confidence trap" detector and two more reliable evaluators. You could also mix different prompts with the same model, as we showed earlier.

Set clear thresholds before you start. Define exactly when human review gets triggered. Our 2-point threshold works well for most cases, but you might need 3 points for more lenient systems or 1 point for critical applications. Document this decision and stick to it.

Track your disagreement rates over time. If 50% of evaluations trigger human review, your threshold is too strict or your judges are poorly calibrated. If only 2% trigger review, you might be missing problems. Aim for 10-20% disagreement rate as a healthy signal.

Balance cost against quality. Each additional judge increases API costs but improves reliability. For production systems processing millions of evaluations, even a small accuracy gain justifies the extra expense. For one-off benchmarks, three judges is usually sufficient.

Real-World Application Tips

For critical evaluations where errors are unacceptable, always use multi-judge systems plus random spot-checking by humans. The spot-checking validates that your judges are working correctly and catches systematic errors.

For bulk screening tasks like filtering spam or low-quality content, a single judge might be acceptable if you're running random audits on 5-10% of evaluations. Log everything so you can investigate patterns later.

Make sure to log all disagreements for later analysis. These logs reveal where your evaluation system struggles and help you improve judge prompts or add new test cases. Patterns in disagreement often point to systematic issues in how you've defined evaluation criteria.

Consider setting up async human review workflows for flagged cases. When judges disagree, route those evaluations to a review queue instead of blocking your pipeline. This keeps your system fast while ensuring problematic cases get proper attention.

The goal isn't to eliminate human judgment. It's to use automation where it's reliable and route edge cases to humans efficiently. Multi-judge evaluation with disagreement detection gives you exactly that: a clear signal for when to trust the machines and when to bring in the humans.

Conclusion

Single LLM evaluators are overconfident by design. They'll rate factually wrong answers as "excellent" because they prioritize how something sounds over whether it's correct. This isn't a bug you can patch; it's fundamental to how these models work.

Multi-judge systems don't eliminate this problem. Instead, they make it visible. When judges disagree by 2+ points, you know automated evaluation isn't reliable for that case. Disagreement becomes your signal to bring in human review, turning a hidden failure mode into an actionable metric.

The key shift: stop trying to build perfect evaluators. Build systems that know when they're uncertain.

The complete code for this multi-judge evaluation method is available on our GitHub page. It's well-documented and costs about $0.02 per evaluation to run, allowing you to test it with your own questions and answers. By seeing exactly where your judges disagree, you'll learn far more about evaluation reliability than any single benchmark score could teach. While this post covers the basics, for production systems, consider exploring advanced techniques like calibration (adjusting judge confidence scores), more sophisticated ensemble methods (e.g., weighted voting), and active learning approaches (using disagreement to identify crucial training examples).